Over the past year-and-a-half, artificial intelligence has been enjoying its mainstream breakthrough.

The instant success of ChatGPT and other AI-based tools and services kick-started what many believe is a new revolution.

By now it is clear that AI offers endless possibilities. While there’s no shortage of new avenues to pursue, most applications rely on input or work from users. That’s fine for the tech-savvy, but some prefer instant results.

For example, we have experimented with various AI-powered image-generation tools to create stock images to complement our news articles. While these all work to some degree, getting good output can be quite a challenge, not to mention that it costs time and money too.

But what if someone created a stock photo website pre-populated with over a million high-quality royalty-free images? And what if we could freely use the photos from that site because they’re all in the public domain? That would be great.

Enter: StockCake…

StockCake is a new platform by AI startup Imaginary Machines. The site currently hosts more than a million pre-generated images, which can be downloaded, used, and shared for free. There are no strings attached, as all photos are in the public domain.

A service like this isn’t of much use to people who aim to generate completely custom images or photos. All content is pre-made and there is no option to alter the prompts. Instead, the site is aimed at people who want instant stock images for their websites, social media, or any other type of presentation.

Using AI to Democratize Media

TorrentFreak spoke with StockCake founder Nen Fard to find out what motivated him to start this project and how he plans to develop it going forward. He told us that it’s long been a dream to share media freely online with anyone who needs it.

“My journey towards leveraging AI for media content began with a keen interest in the field’s rapid advancements. The defining moment came when I observed a significant leap in the quality of AI-generated content, reaching a standard that was not just acceptable but impressive.

“This realization was pivotal, sparking the transition from ideation to action. It underscored the feasibility of using AI to fulfill a long-held dream of democratizing media content, leading to the birth of StockCake,” Fard adds.

The careful reader will pick up that Fard's responses were partly edited using AI technology. However, the message is clear: Fard saw the potential to create a vast library of stock photos, place it in the public domain, and make it free to the public at large. And it didn't stop there.

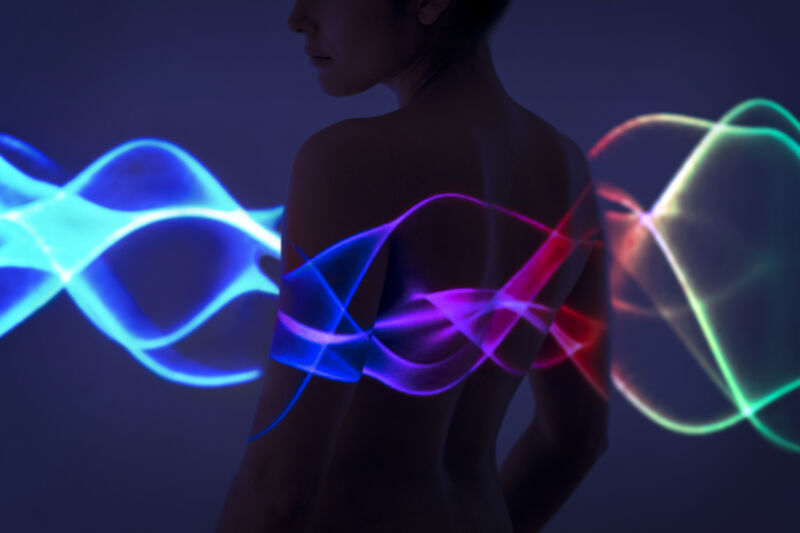

StockTune

Shortly after releasing StockCake, Fard went live with another public domain project: StockTune. This platform is pretty much identical but focuses on audio instead. The tracks that are listed can be used free of charge and without attribution.

It's not hard to see how these two sites could replace the basic use of commercial stock footage platforms. While they are still in their infancy, the sites already offer a selection of decent quality. At the same time, various AI filters are in place to minimize inappropriate content.

The AI technology, which is partly based on OpenAI and Stability AI, also aims to ensure that the underlying models are legitimate. While legal issues can always pop up, both services strive to play fair so they can continue to grow, perhaps indefinitely.

Ever-Expanding Libraries

At the time of writing, StockCake has a little over a million photos hosted on the site, while there are nearly 100,000 tracks on StockTune. This is just the beginning, though, as AI generates new versions every minute, which are added to the site if the quality is up to par.

Theoretically, there's no limit to the number of variations that can be created. While quality comes first, the founder's vision has always been to create unrestricted access to media, which means that the libraries are ever-expanding.

“The inception of StockCake and StockTune was driven by a vision to revolutionize the accessibility of media content. Unlike traditional platforms, we leverage the limitless potential of AI to create an ever-expanding, diverse set of photos and songs,” Fard says.

Both stock media sites have something suitable for most general topics. However, you won't find very specific combinations, such as a "squirrel playing football." AI-rendered versions of some people, Donald Trump, for example, appear to be off limits too.

Monetizing the Public Domain?

While all of the above sounds very promising, the sites are likely to face plenty of challenges. The platforms are currently not monetized, but the AI technology and hosting obviously cost money, so this will have to change.

Fard tells us that he plans to keep access to the photos and audio completely free. However, he's considering options to generate revenue. Advertising is one option, and more advanced subscription-based services are another. Or, to put it in his AI-amplified words:

“As our platforms continue to grow and evolve, they will naturally give rise to opportunities that support our sustainability without compromising our values. We aim to foster a community where creativity is unrestrained by financial barriers, and every advancement brings us closer to this goal,” Fard says.

For example, the developer plans to launch a suite of AI-powered tools for expert users, to personalize and upscale images when needed. That could be part of a paid service. However, existing footage will remain in the public domain, without charge, he promises.

“Looking ahead, we plan to introduce a suite of AI-powered tools that promise to enhance the creative possibilities for our users significantly. These include upscaling tools for generating higher-resolution photos, style transfer tools that can adapt content to specific artistic aesthetics, and character/object replacement tools for personalized customization.”

The C Word

It's remarkable that a small startup can create such a vast amount of stock footage and share it freely. This may also spook some of the incumbents, who make millions of dollars from their stock photo platforms. While these can't stop AI technology, they can complain. And they do.

For example, last year, Getty Images sued Stability AI, alleging that the company used Getty's stock photos to train its models. This lawsuit remains ongoing. While Fard doesn't anticipate any legal trouble, he has some thoughts on the copyright implications.

“At Imagination Machines, the driving force behind StockCake and StockTune, we believe that the essence of creativity and innovation should be accessible to all. This belief guides our approach to AI-generated media, which, by its nature, challenges traditional notions of copyright,” Fard says.

The site’s developer trusts that the company’s AI partners respect existing copyright law. And by putting all creations in the public domain, the company itself claims no copyrights.

“Currently, AI-generated content resides in a unique position within copyright laws. These laws were crafted in a different era, focusing on human authorship as the cornerstone of copyright eligibility. However, the remarkable capabilities of AI to generate original, high-quality photos and music without direct human authorship put us at the edge of a new frontier.

“We operate under the current legal framework, which does not extend copyright protection to works created without human ingenuity, allowing us to offer this content in the public domain.”

Both StockCake and StockTune have the potential to be disruptors, but Fard wants to play fair and remain within the boundaries of the law. He also understands that the law may change in the future, and plans to have his voice heard in that debate.

“Our goal is not just to navigate the current legal issues but also to actively advocate for laws that recognize the potential of AI to democratize access to creative content while respecting the rights and contributions of human creators,” Fard concludes.

With AI legal battles and copyright policy revving up globally, there’s certainly plenty of opportunity to advocate.

From: TF, for the latest news on copyright battles, piracy and more.
