Quantum error correction used to actually correct errors

news.movim.eu / ArsTechnica · Wednesday, 3 April · 15:08

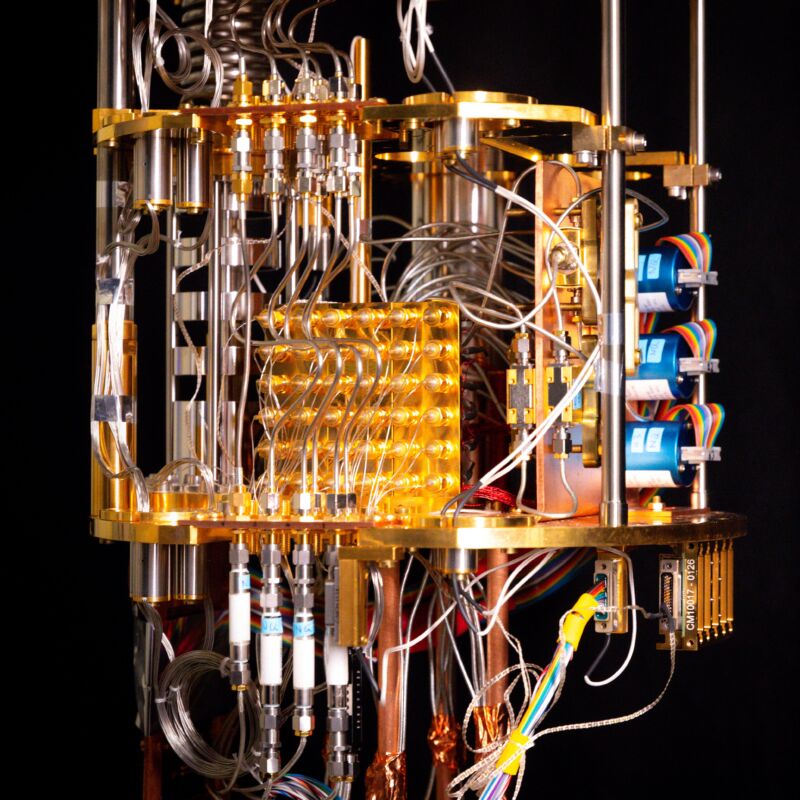

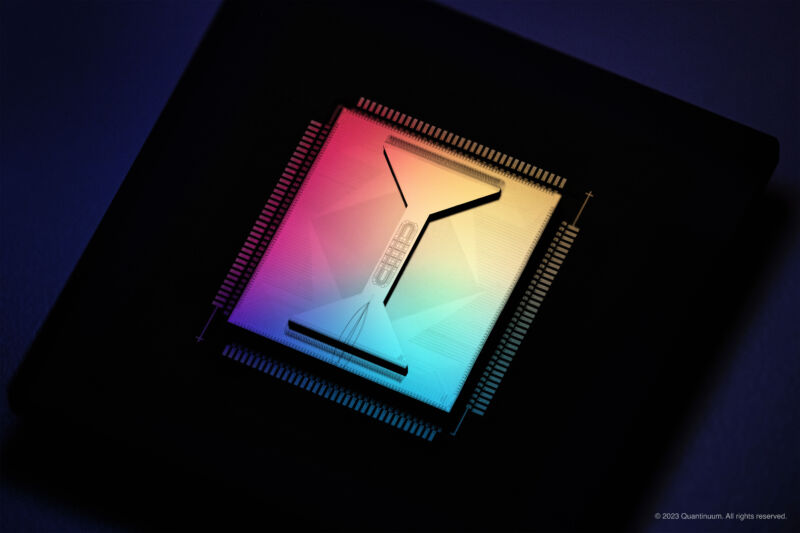

Quantinuum's H2 "racetrack" quantum processor. (credit: Quantinuum)

Today's quantum computing hardware is severely limited by errors that are difficult to avoid. Problems can arise at every step, from setting the initial state of a qubit to reading out its result, and qubits will occasionally lose their state even while doing nothing. Some of today's quantum processors can't use all of their individual qubits for a single calculation without errors becoming inevitable.

The solution is to combine multiple hardware qubits to form what's termed a logical qubit. This allows a single bit of quantum information to be distributed among multiple hardware qubits, reducing the impact of individual errors. Additional qubits can be used as sensors to detect errors and allow interventions to correct them. Recently, there have been a number of demonstrations that logical qubits work in principle.
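To make the idea concrete, here is a minimal sketch of the simplest classical analogue, a 3-bit repetition code. It is an illustration only, not the scheme Microsoft and Quantinuum used: it assumes that the only errors are bit flips (real quantum codes must also catch phase errors), and every function name is hypothetical. The two parity checks play the role of the extra "sensor" qubits, flagging which bit flipped without reading out the logical value itself.

```python
# Hypothetical toy model: a 3-bit repetition code, the simplest classical
# analogue of a logical qubit. It protects only against bit flips; real
# quantum codes must also handle phase errors, which this sketch ignores.

def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]


def syndrome(code: list[int]) -> tuple[int, int]:
    """Parity checks standing in for the 'sensor' qubits: they compare
    neighbouring bits without revealing the logical value itself."""
    return (code[0] ^ code[1], code[1] ^ code[2])


# Which physical bit to flip back for each syndrome (None = no error seen).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(code: list[int]) -> list[int]:
    """Use the syndrome to locate and undo a single bit flip."""
    fixed = list(code)
    target = CORRECTION[syndrome(code)]
    if target is not None:
        fixed[target] ^= 1
    return fixed


def decode(code: list[int]) -> int:
    """Majority vote recovers the logical bit."""
    return 1 if sum(code) >= 2 else 0


def logical_error_rate(p: float) -> float:
    """Probability that two or more of the three bits flip, which this
    code cannot fix: 3p^2(1 - p) + p^3."""
    return 3 * p**2 * (1 - p) + p**3


if __name__ == "__main__":
    # Every single-bit error on either logical value is corrected.
    for logical in (0, 1):
        for i in range(3):
            noisy = encode(logical)
            noisy[i] ^= 1  # inject one bit-flip error
            assert decode(correct(noisy)) == logical
    p = 0.01
    print(f"physical error rate {p}, logical error rate {logical_error_rate(p):.6f}")
```

With a per-bit error probability p, the encoded bit fails only when at least two bits flip. For p = 0.01 that works out to roughly 0.0003, well below the physical rate, which is the whole point of spreading one bit of information across several carriers.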

On Wednesday, Microsoft and Quantinuum announced that logical qubits work in more than principle. "We've been able to demonstrate what's called active syndrome extraction, or sometimes it's also called repeated error correction," Microsoft's Krysta Svore told Ars. "And we've been able to do this such that it is better than the underlying physical error rate. So it actually works."
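The "repeated error correction" Svore describes means running those checks again and again while the qubits hold their data. The toy Monte Carlo below is, again, a hypothetical classical bit-flip model rather than the companies' actual experiment, and it assumes perfectly reliable syndrome measurements, which real hardware does not have. It compares a lone bit left unprotected for several rounds with a 3-bit encoded copy that is checked and corrected after every round.

```python
import random

# Hypothetical toy model of repeated syndrome extraction on a 3-bit
# repetition code. Assumes bit-flip noise only and error-free parity checks.

# Syndrome -> index of the bit to flip back (all-zero syndrome: do nothing).
FIX = {(1, 0): 0, (1, 1): 1, (0, 1): 2}


def run_trial(rng: random.Random, p: float, rounds: int) -> tuple[bool, bool]:
    """Return (bare_failed, encoded_failed) for one simulated memory run."""
    bare = 0
    code = [0, 0, 0]
    for _ in range(rounds):
        # Idle noise: each physical bit flips independently with probability p.
        if rng.random() < p:
            bare ^= 1
        code = [b ^ 1 if rng.random() < p else b for b in code]
        # One round of syndrome extraction followed by correction.
        s = (code[0] ^ code[1], code[1] ^ code[2])
        target = FIX.get(s)
        if target is not None:
            code[target] ^= 1
    encoded_value = 1 if sum(code) >= 2 else 0
    return bare != 0, encoded_value != 0


def estimate(p: float = 0.01, rounds: int = 10, trials: int = 20_000,
             seed: int = 1) -> tuple[float, float]:
    """Estimate failure rates of the bare bit and the encoded bit."""
    rng = random.Random(seed)
    bare_fail = enc_fail = 0
    for _ in range(trials):
        b, e = run_trial(rng, p, rounds)
        bare_fail += b
        enc_fail += e
    return bare_fail / trials, enc_fail / trials


if __name__ == "__main__":
    bare_rate, enc_rate = estimate()
    print(f"unprotected: {bare_rate:.4f}  encoded + corrected: {enc_rate:.4f}")
```

With the default parameters the encoded copy should fail far less often than the bare bit, the toy-model version of doing "better than the underlying physical error rate." Raising p toward 0.5 erodes that advantage, since the code only helps while errors are rare enough that two flips in one round are unlikely.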