Nvidia unveils Blackwell B200, the “world’s most powerful chip” designed for AI

news.movim.eu / ArsTechnica · Tuesday, 19 March - 15:27 · 1 minute

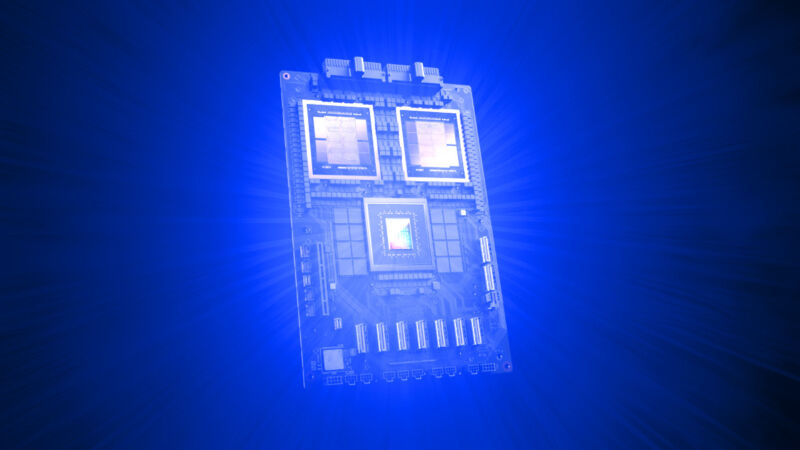

The GB200 "superchip" covered with a fanciful blue explosion that suggests computational power bursting forth from within. The chip does not actually glow blue in reality. (credit: Nvidia / Benj Edwards)

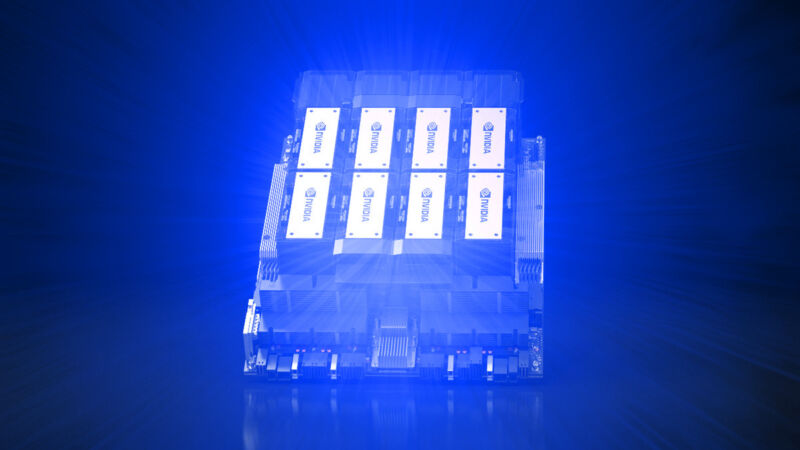

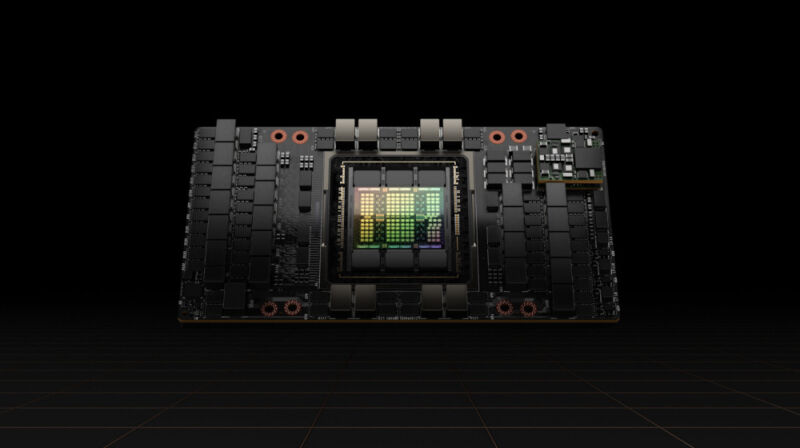

On Monday, Nvidia unveiled the Blackwell B200 tensor core chip, the company's most powerful single-chip GPU, with 208 billion transistors. Nvidia claims the chip can reduce AI inference operating costs (such as running ChatGPT) and energy consumption by up to 25 times compared to the H100. The company also unveiled the GB200, a "superchip" that combines two B200 chips and a Grace CPU for even more performance.

The news came as part of Nvidia's annual GTC conference, which is taking place this week at the San Jose Convention Center. Nvidia CEO Jensen Huang delivered the keynote Monday afternoon. "We need bigger GPUs," he said. The Blackwell platform will allow the training of trillion-parameter AI models that will make today's generative AI models look rudimentary in comparison, he said. For reference, OpenAI's GPT-3, launched in 2020, included 175 billion parameters. Parameter count is a rough indicator of AI model complexity.

Nvidia named the Blackwell architecture after David Harold Blackwell, a mathematician who specialized in game theory and statistics and was the first Black scholar inducted into the National Academy of Sciences. The platform introduces six technologies for accelerated computing, including a second-generation Transformer Engine, fifth-generation NVLink, RAS Engine, secure AI capabilities, and a decompression engine for accelerated database queries.