-

chevron_right

chevron_right

IA – Des biais de genre qui font froid dans le dos !

news.movim.eu / Korben · 7 days ago - 23:30 · 4 minutes

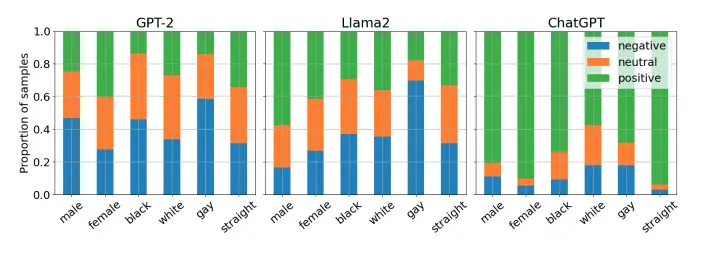

Malheureusement, j’ai une nouvelle qui va vous faire bondir de votre canapé ! 😱 Figurez-vous que nos chers modèles de langage d’IA, là, genre GPT-4, GPT-3, Llama 2 et compagnie, eh ben ils sont bourrés de biais de genre ! Si si, et c’est pas moi qui le dis, c’est l’UNESCO qui vient de sortir une étude là-dessus.

Cette étude, menée par des chercheurs de l’University College London (UCL) et de l’UNESCO, a fait de l’analyse de contenu pour repérer les stéréotypes de genre, des tests pour voir si les IA étaient capables de générer un langage neutre, de l’analyse de diversité dans les textes générés, et même de l’analyse des associations de mots liées aux noms masculins et féminins.

Bref, ils ont passé les modèles au peigne fin et les résultats piquent les yeux. Déjà, ces IA ont une fâcheuse tendance à associer les noms féminins à des mots comme « famille », « enfants », « mari » , bref, tout ce qui renvoie aux stéréotypes de genre les plus éculés . Pendant ce temps-là, les noms masculins, eux, sont plus souvent associés à des termes comme « carrière », « dirigeants », « entreprise »… Vous voyez le tableau quoi. 🙄

Et attendez, ça ne s’arrête pas là ! Quand on demande à ces IA d’écrire des histoires sur des personnes de différents genres, cultures ou orientations sexuelles, là aussi ça part en vrille. Par exemple, les hommes se retrouvent bien plus souvent avec des jobs prestigieux genre « ingénieur » ou « médecin » , tandis que les femmes sont reléguées à des rôles sous-valorisés ou carrément stigmatisés, genre « domestique », « cuisinière » ou même « prostituée » ! On se croirait revenu au Moyen-Âge !

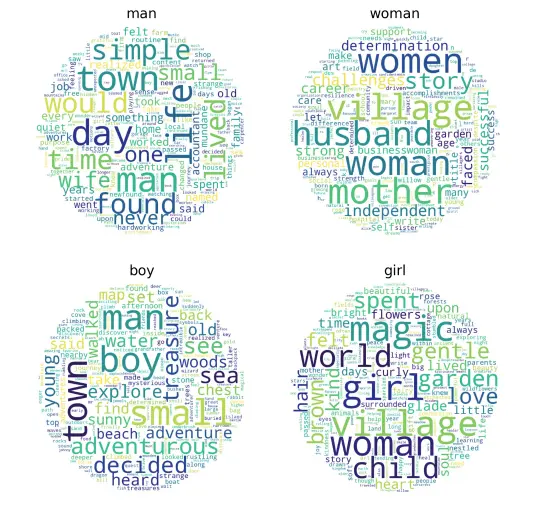

Tenez, un exemple frappant avec Llama 2 : dans les histoires générées, les mots les plus fréquents pour les garçons et les hommes c’est « trésor », « bois », « mer », « aventureux », « décidé »… Alors que pour les femmes, on a droit à « jardin », « amour », « doux », « mari »…et le pire, c’est que les femmes sont décrites quatre fois plus souvent dans des rôles domestiques que les hommes .

Nombreux sont les gens de la tech qui se battent pour plus de diversité et d’égalité dans ce milieu , et voilà que les IA les plus avancées crachent à la gueule de tous des clichés dignes des années 50 ! Il est donc grand temps de repenser en profondeur la façon dont on développe ces technologies parce que là, non seulement ça perpétue les inégalités , mais en plus ça risque d’avoir un impact bien réel sur la société vu comme ces IA sont de plus en plus utilisées partout !

Alors ok, y a bien quelques boîtes qui arrivent mieux à limiter la casse, mais globalement, c’est la cata. Et le pire, c’est que ces biais viennent en grande partie des données utilisées pour entraîner les IA , qui deviennent à leur tour ce reflet de tous les stéréotypes et discriminations bien ancrés dans notre monde…

Mais attention, faut pas tomber dans le piège de dire que ces IA sont volontairement biaisées ou discriminatoires hein. En fait, ce sont juste des systèmes hyper complexes qui apprennent à partir des données sur lesquelles on les entraîne. Donc forcément, si ces données sont elles-mêmes biaisées, et bien les IA vont refléter ces biais. C’est pas qu’elles cherchent à discriminer, c’est juste qu’elles reproduisent ce qu’elles ont « appris ».

Mais bon, faut pas désespérer non plus hein. Déjà, des études comme celle de l’UNESCO, ça permet de mettre en lumière le problème et de sensibiliser l’opinion et les décideurs et puis surtout, il y a des pistes de solutions qui émergent. Les chercheurs de l’UNESCO appellent par exemple à renforcer la diversité et l’inclusivité des données d’entraînement , à mettre en place des audits réguliers pour détecter les biais, à impliquer davantage les parties prenantes dans le développement des IA, ou encore à former le grand public aux enjeux… Bref, tout un tas de leviers sur lesquels on peut jouer pour essayer de rééquilibrer la balance !

Alors voilà, je voulais partager ça avec vous parce que je trouve que c’est un sujet super important, qui nous concerne tous en tant que citoyens du monde numérique. Il est crucial qu’on garde un œil vigilant sur ces dérives éthiques et qu’on se batte pour que l’IA soit développée dans le sens du progrès social et pas l’inverse. Parce que sinon, on court droit à la catastrophe, et ça, même le plus optimiste des Bisounours ne pourra pas le nier !

N’hésitez pas à jeter un coup d’œil à l’étude de l’UNESCO , elle est super intéressante et surtout, continuez à ouvrir vos chakras sur ces questions d’éthique IA, parce que c’est un défis majeurs qui nous attend.

Allez, sur ce, je retourne binge-watcher l’intégrale de Terminator en espérant que ça ne devienne pas un documentaire… Prenez soin de vous les amis, et méfiez-vous des machines ! Peace ! ✌️