AI model from OpenAI automatically recognizes speech and translates it to English

news.movim.eu / ArsTechnica · Thursday, 22 September, 2022 - 16:48

Image credit: Benj Edwards / Ars Technica

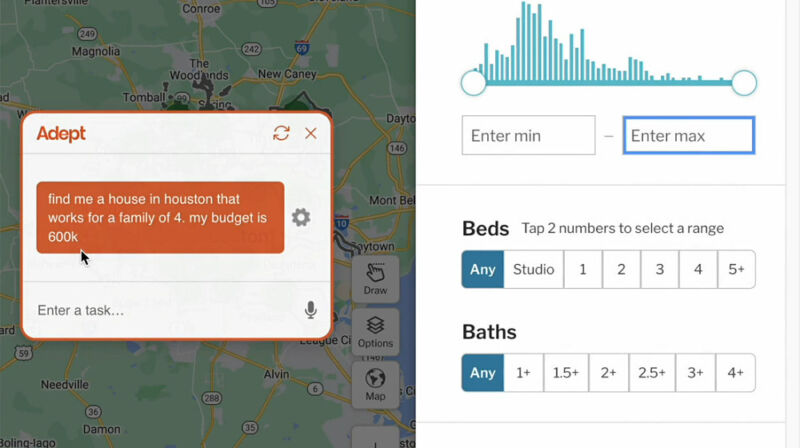

On Wednesday, OpenAI released a new open source AI model called Whisper that recognizes and translates audio at a level that approaches human recognition ability. It can transcribe interviews, podcasts, conversations, and more.

OpenAI trained Whisper on 680,000 hours of audio data and matching transcripts in 98 languages collected from the web. According to OpenAI, this open-collection approach has led to "improved robustness to accents, background noise, and technical language." The model can also detect the spoken language and translate it to English.
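For readers who want to try this, here is a minimal sketch of transcription, language detection, and translation to English. It assumes the open source `openai-whisper` Python package and `ffmpeg` are installed. The helper names `decode_options` and `run_whisper` are hypothetical and exist only for illustration; they are not part of OpenAI's release.

```python
def decode_options(translate_to_english: bool) -> dict:
    """Build the keyword options passed to Whisper's transcribe() call."""
    # "transcribe" keeps the spoken language; "translate" produces English text.
    return {"task": "translate" if translate_to_english else "transcribe"}


def run_whisper(audio_path: str, translate_to_english: bool = False,
                model_name: str = "base") -> dict:
    """Run Whisper on one audio file (hypothetical wrapper for illustration)."""
    # Imported lazily so decode_options() works without the package installed.
    import whisper  # assumption: installed via `pip install openai-whisper`

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path, **decode_options(translate_to_english))
    # result["language"] holds the detected language code,
    # result["text"] holds the transcript (or the English translation).
    return {"language": result["language"], "text": result["text"]}
```

Calling `run_whisper("interview.mp3", translate_to_english=True)` would return the detected language alongside an English rendering of the speech; the file name here is a placeholder. Larger model names generally trade speed for accuracy.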

OpenAI describes Whisper as an encoder-decoder transformer, a type of neural network that can use context gleaned from input data to learn associations that can then be translated into the model's output. OpenAI presents this overview of Whisper's operation: