Today is my last day of vacation for this summer.

I had more or less important stuff planned, but yesterday I learnt about stable diffusion, "a machine-learning model to generate digital images from natural language descriptions", which happens to be open source, including its trained weights.

It's also pretty unfiltered, making it possible to generate so-called "deep fakes", erotica and/or images featuring trademarked things, for extra fun.

Being the nerd that I am, I couldn't help but try it out today, and I ended up building this in a couple of hours:

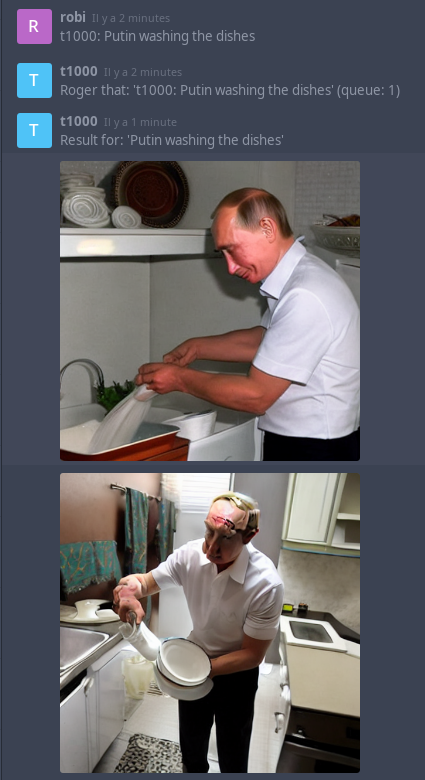

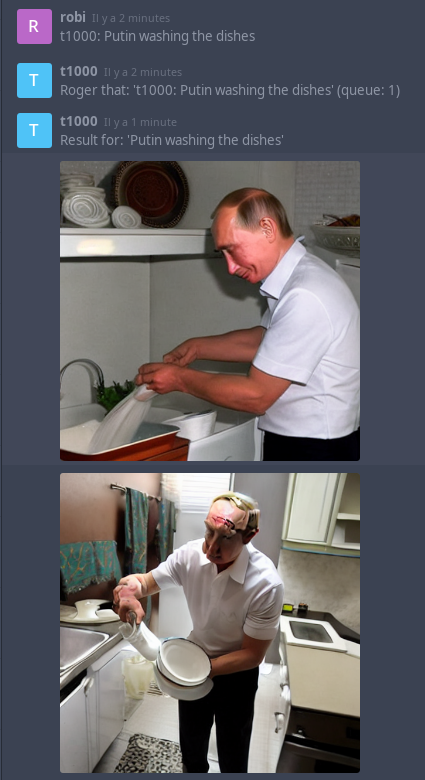

What do we see in this image? A screenshot of Dino showing an XMPP group chat with a bot named t1000, and my dad (robi) prompting the bot to generate an image (well, 2 actually).

Since this type of bot is quite fun to play with, I thought it would be nice to share how I did this.

Disclaimer: this is a very quick'n'dirty way to get this bot running; it's (very, very) far from being as advanced as this "similar" (hum) Discord bot.

Generating images from the command line

Before even thinking about a bot, I needed to try to generate some images "the hard way".

It turned out to be pretty simple; I had expected this part to be a lot more painful and time-consuming.

I followed the instructions from the official GitHub repo, which included downloading the trained weights from Hugging Face.

Unfortunately, it turned out that an RTX 3080 Ti with its 12GB of VRAM was not enough for this base version, but a quick look at this GitHub issue led me to this fork, which happens to have scripts for GPUs with only 4GB of VRAM.

There are probably a lot of other alternatives to generate images on limited hardware, but this one worked for me, so I did not look further.

After copying the `./optimizedSD` folder into the original stable diffusion source tree, I could run inference like this:

```shell
python optimizedSD/optimized_txt2img.py \
    --prompt "Luffy with a guitar" \
    --H 512 --W 512 \
    --seed 27 \
    --n_iter 2 \
    --n_samples 10 \
    --ddim_steps 50
```

which filled a folder with 20 images (n_iter × n_samples = 2 × 10) in about 3 minutes.
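The same invocation can also be assembled programmatically, which is roughly what the bot below does. This is a minimal sketch, assuming the flag names from the command above; `build_txt2img_cmd` is a hypothetical helper, and the multiple-of-64 check mirrors a comment in the bot's code rather than anything verified against the script itself.

```python
import shlex


def build_txt2img_cmd(
    prompt: str,
    seed: int,
    height: int = 512,
    width: int = 512,
    n_iter: int = 2,
    n_samples: int = 10,
    ddim_steps: int = 50,
) -> list[str]:
    """Hypothetical helper: build the argv for optimized_txt2img.py.

    Assumes the flags shown in the command above; the script writes
    n_iter * n_samples images in total.
    """
    # Dimensions are assumed to need to be multiples of 64
    for name, value in (("height", height), ("width", width)):
        if value % 64 != 0:
            raise ValueError(f"{name} must be a multiple of 64, got {value}")
    return [
        "python",
        "optimizedSD/optimized_txt2img.py",
        "--prompt", prompt,
        "--H", str(height),
        "--W", str(width),
        "--seed", str(seed),
        "--n_iter", str(n_iter),
        "--n_samples", str(n_samples),
        "--ddim_steps", str(ddim_steps),
    ]


cmd = build_txt2img_cmd("Luffy with a guitar", seed=27)
# Print a shell-quoted version, handy for copy-pasting into a terminal
print(shlex.join(cmd))
```

Passing the list directly to `subprocess.run(cmd)` or `asyncio.create_subprocess_exec(*cmd)` (as the bot does) means the prompt never goes through a shell, so quotes or semicolons in a prompt cannot break the command.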

The actual bot

There are about a million things that are wrong with this implementation, but hey, it let my non-techie friends play with this crazy shit, so my goal was met.

```python
import asyncio
import logging
import random
import re
import tempfile
import time
from argparse import ArgumentParser
from pathlib import Path

import aiohttp
import slixmpp
from slixmpp import JID

# This is where you git cloned stable diffusion
CWD = Path("/home/nicoco/tmp/stable-diffusion/")

# This is the command you used to generate images.
# Here we only generate 4 images per prompt.
CMD_TXT2IMG = [
    "/home/nicoco/.local/miniconda3/envs/ldm/bin/python",
    "/home/nicoco/tmp/stable-diffusion/optimizedSD/optimized_txt2img.py",
    "--H",  # height and width must be multiples of 64
    "512",  # more pixels = more VRAM needed
    "--W",
    "512",
    "--n_iter",  # n_output_img = n_iter x n_samples
    "1",  # higher n_samples = faster, but more VRAM
    "--n_samples",
    "4",
    "--ddim_steps",
    "50",
    "--turbo",  # remove these
    "--unet_bs",  # 3 last lines
    "4",  # for lower VRAM usage
]

CMD_IMG2IMG = [
    "/home/nicoco/.local/miniconda3/envs/ldm/bin/python",
    "/home/nicoco/tmp/stable-diffusion/optimizedSD/optimized_img2img.py",
    "--H",  # height and width must be multiples of 64
    "512",  # more pixels = more VRAM needed
    "--W",
    "512",
    "--n_iter",  # n_output_img = n_iter x n_samples
    "1",  # higher n_samples = faster, but more VRAM
    "--n_samples",
    "4",
    "--ddim_steps",
    "50",
    "--turbo",  # remove these
    "--unet_bs",  # 3 last lines
    "4",  # for lower VRAM usage
]


async def worker(bot: "MUCBot"):
    # This will process requests one after the other, because
    # chances are you can only run one at a time with a
    # consumer-grade GPU.
    # Adapted from the python docs: https://docs.python.org/3/library/asyncio-queue.html
    q = bot.queue
    while True:
        msg = await q.get()
        start = time.time()
        # this should be replaced with a proper regex…
        # (case-insensitive, to match the check in muc_message)
        prompt = re.sub(
            f"^{re.escape(bot.nick)}(.)", "", msg["body"], flags=re.IGNORECASE
        ).strip()
        # let's call an external process that spawns
        # another python interpreter. quick and dirty, remember?
        try:
            url = bot.images_waiting_for_prompts.pop(msg["from"])
        except KeyError:
            # no pending image from this user: text-to-image
            proc = await asyncio.create_subprocess_exec(
                *CMD_TXT2IMG,
                "--prompt",
                prompt,
                "--seed",
                str(random.randint(0, 1_000_000_000)),  # random=fun
                stdout=asyncio.subprocess.PIPE,
                stderr=asyncio.subprocess.PIPE,
                cwd=CWD,
            )
            stdout, stderr = await proc.communicate()
        else:
            # this user posted an image earlier: image-to-image
            with tempfile.NamedTemporaryFile() as f:
                async with aiohttp.ClientSession() as session:
                    async with session.get(url) as r:
                        f.write(await r.read())
                # make sure the image is on disk before the subprocess reads it
                f.flush()
                proc = await asyncio.create_subprocess_exec(
                    *CMD_IMG2IMG,
                    "--prompt",
                    prompt,
                    "--seed",
                    str(random.randint(0, 1_000_000_000)),  # random=fun
                    "--init-img",
                    f.name,
                    stdout=asyncio.subprocess.PIPE,
                    stderr=asyncio.subprocess.PIPE,
                    cwd=CWD,
                )
                stdout, stderr = await proc.communicate()

        print(stdout.decode())  # print, the best debugger ever™
        print(stderr.decode())

        # This retrieves the directory where the images were written
        # from the process's stdout. Yes, it is very ugly.
        output_dir = CWD / stdout.decode().split("\n")[-3].split()[-1]
        print(output_dir)
        q.task_done()

        bot.send_message(
            mto=msg["from"].bare,
            mbody=f"Result for: '{prompt}' (took {round(time.time() - start)} seconds)",
            mtype="groupchat",
        )
        for img in output_dir.glob("*"):
            if img.stat().st_mtime < start:
                continue  # only upload latest generated images
            url = await bot["xep_0363"].upload_file(filename=img)
            reply = bot.make_message(
                mto=msg["from"].bare,
                mtype="groupchat",
            )
            # these lines are required to make the Conversations
            # Android XMPP client actually display the image in
            # the app, and not just a link
            reply["oob"]["url"] = url
            reply["body"] = url
            reply.send()


# This part is basically just the slixmpp example MUCBot with some
# minor changes
class MUCBot(slixmpp.ClientXMPP):
    def __init__(self, jid, password, rooms: list[str], nick):
        slixmpp.ClientXMPP.__init__(self, jid, password)
        self.queue = asyncio.Queue()
        self.rooms = rooms
        self.nick = nick
        self.add_event_handler("session_start", self.start)
        self.add_event_handler("groupchat_message", self.muc_message)
        # sender's full JID -> URL of the last image they posted
        self.images_waiting_for_prompts = {}
        self.worker_task = None

    async def start(self, _event):
        await self.get_roster()
        self.send_presence()
        for r in self.rooms:
            await self.plugin["xep_0045"].join_muc(JID(r), self.nick)
        # keep a reference so the task is not garbage-collected,
        # and don't start a second worker after a reconnection
        if self.worker_task is None:
            self.worker_task = asyncio.create_task(worker(self))

    async def muc_message(self, msg):
        if msg["mucnick"] != self.nick:
            if msg["body"].lower().startswith(self.nick.lower()):
                await self.queue.put(msg)
                self.send_message(
                    mto=msg["from"].bare,
                    mbody=f"Roger that: '{msg['body']}' (queue: {self.queue.qsize()})",
                    mtype="groupchat",
                )
            elif url := msg["oob"]["url"]:
                self.send_message(
                    mto=msg["from"].bare,
                    mbody="OK, what should I do with this image?",
                    mtype="groupchat",
                )
                self.images_waiting_for_prompts[msg["from"]] = url


if __name__ == "__main__":
    parser = ArgumentParser()
    parser.add_argument(
        "-q",
        "--quiet",
        help="set logging to ERROR",
        action="store_const",
        dest="loglevel",
        const=logging.ERROR,
        default=logging.INFO,
    )
    parser.add_argument(
        "-d",
        "--debug",
        help="set logging to DEBUG",
        action="store_const",
        dest="loglevel",
        const=logging.DEBUG,
        default=logging.INFO,
    )
    parser.add_argument("-j", "--jid", dest="jid", help="JID to use")
    parser.add_argument("-p", "--password", dest="password", help="password to use")
    parser.add_argument(
        "-r", "--rooms", dest="rooms", help="MUC rooms to join", nargs="*"
    )
    parser.add_argument("-n", "--nick", dest="nick", help="MUC nickname")
    args = parser.parse_args()

    logging.basicConfig(level=args.loglevel, format="%(levelname)-8s %(message)s")

    xmpp = MUCBot(args.jid, args.password, args.rooms, args.nick)
    xmpp.register_plugin("xep_0030")  # Service Discovery
    xmpp.register_plugin("xep_0045")  # Multi-User Chat
    xmpp.register_plugin("xep_0199")  # XMPP Ping
    xmpp.register_plugin("xep_0363")  # HTTP upload
    xmpp.register_plugin("xep_0066")  # Out of band data
    xmpp.connect()
    xmpp.process()
```

After installing slixmpp and aiohttp using your favorite Python environment isolation tool, you can launch the bot with:

```shell
# -j: XMPP account of the bot, -p: its password,
# -r: MUC rooms to join, -n: nickname in those rooms
python bot.py \
    -j bot@example.com \
    -p XXX \
    -r room1@conference.example.com room2@conference.example.com \
    -n t1000
```

The bot can join several rooms, so you can have one that you can show your mother, and another one for your degenerate friends.

Future plans

None! I don't plan to make this a more sophisticated bot.

But maybe I'll make it generate a few more than 2 images at once: generating 2 images takes about 1 minute and generating 20 images takes about 3 minutes, so there is probably a better middle ground.

Especially because so many of the output images are just crap!
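Those two timings hint at a roughly fixed startup cost (plausibly loading the weights every time the script is spawned) plus a per-image cost. Assuming a simple linear model t(n) = overhead + per_image × n, fitted to the two rough data points above, a quick back-of-the-envelope sketch:

```python
def fit_linear(n1: int, t1: float, n2: int, t2: float) -> tuple[float, float]:
    """Fit t(n) = overhead + per_image * n through two (n, t) points."""
    per_image = (t2 - t1) / (n2 - n1)
    overhead = t1 - per_image * n1
    return overhead, per_image


# Rough timings from this post, in seconds: 2 images ~1 min, 20 images ~3 min
overhead, per_image = fit_linear(2, 60.0, 20, 180.0)

for n in (2, 4, 8, 20):
    total = overhead + per_image * n
    print(f"{n:>2} images: ~{total:.0f} s total, ~{total / n:.1f} s per image")
```

Under this assumed model the overhead is about 47 s and each extra image costs about 7 s, so going from 2 to 4 images per prompt adds only around 13 s. Real timings will differ with other settings (e.g. `--turbo` or `--unet_bs`).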

But I should get back to paid work now.

EDIT (2022/09/01): added img2img early in the morning because it is even more fun, changed the number of output images to 4 and tuned a few parameters to take advantage of my beefy GPU.

Now, no more!
