I watched this year's Assembly democompo live, as I usually do (or more precisely, I watched it on stream). I had heard that ASD would return with a demo for the first time in five years, and The legend of Sisyphus did not disappoint. At all. (No, it's not written in assembly; Assembly is the name of the party. :-) )

I do fear that this is the last time we will see such a “blockbuster” demo (meaning, roughly: a demo that is a likely candidate for best high-end demo of the year) at Assembly, or even at any non-demoscene-specific party; the scene is slowly retracting into itself, choosing to clump together in their (our?) own places and seeing the presence of others mainly as an annoyance. But I digress.

Anyway, most demos these days are watched through video captures; even though Navis has done a remarkable job of making it playable on not-state-of-the-art GPUs (the initial part even runs quite well on my Intel embedded GPU, although it just stops working from there), there's something about the convenience. And for my own part, I don't even have Windows, so realtime is mostly off-limits anyway. And this is a demo that really hates your encoder: lots of motion, noise layers, sharp edges that move around erratically. So even though there are some decent YouTube captures out there (and YouTube's encoder does a surprisingly good job in most parts), I wanted to make a truly good capture. One that gives you the real feeling of watching the demo on a high-end machine, without the inevitable blocking you get from a bitrate-constrained codec in really difficult situations.

So, the first problem is actually getting a decent capture: either completely uncompressed (well, losslessly compressed), or so lightly compressed that it doesn't really matter. neon put in a lot of work here with his 4070 Ti machine, but it proved to be quite difficult. For starters, it wouldn't run in .kkapture at all (I don't know the details). It ran under Capturinha, but in the most difficult places, the H.264 bitstream would simply be invalid, and an HEVC recording would not deliver frames for many seconds. Also, the recording was sometimes out of sync even though the demo itself played just fine (which we could compensate for, but it doesn't feel risk-free). An HDMI capture at 2560x1440 was problematic for other reasons involving EDIDs and capture cards.

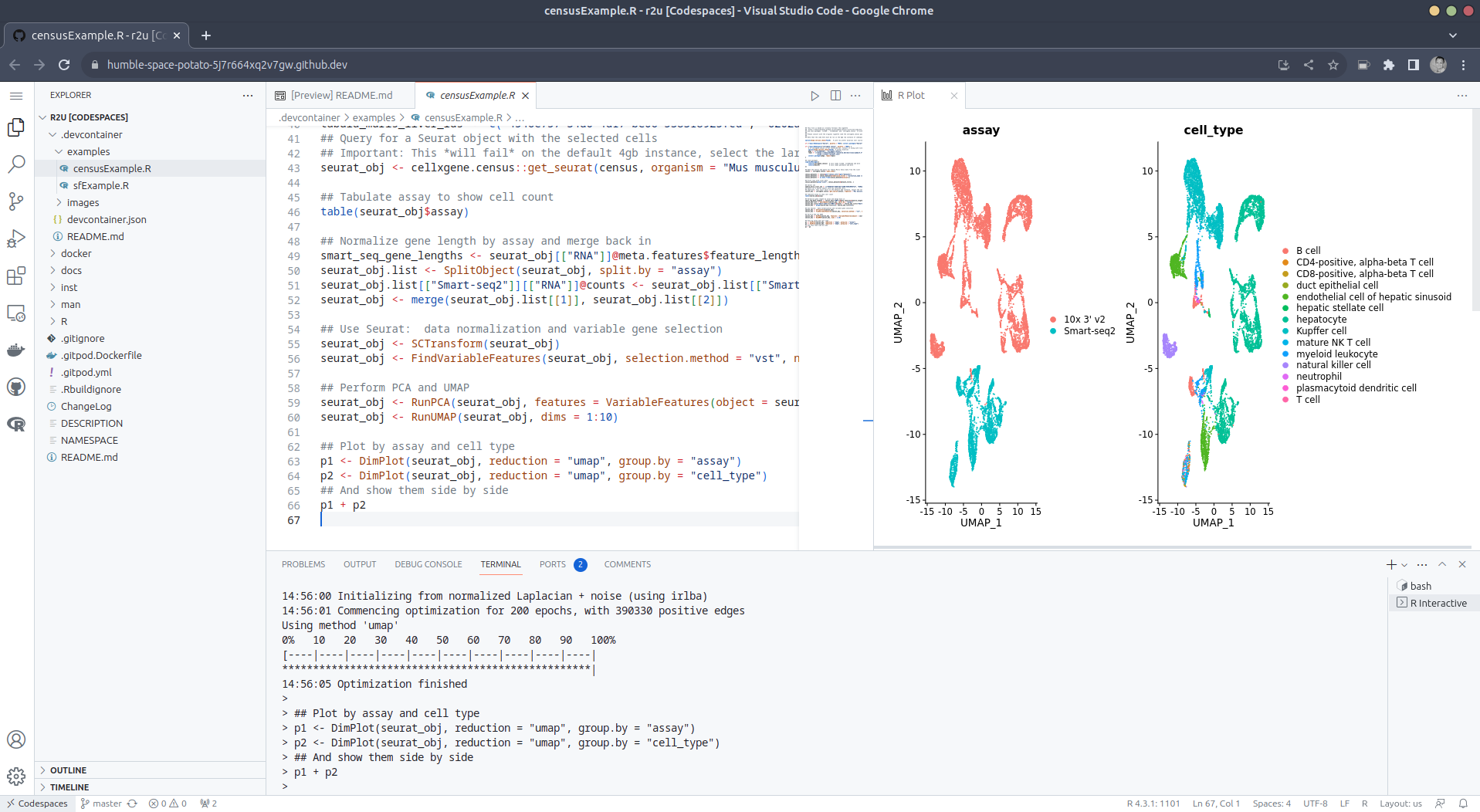

So I decided to peek inside the binary to see if there was a recording mode in the .exe; I mean, they usually upload their own video versions to YouTube, so it would be natural to have one, right? I did find a lot of semi-interesting things (though I didn't care to dive into the decompression code, which I guess is a lot of what makes the prod work at all), but it was also clear that there was no capture mode. However, I've done one-off renders before, so perhaps this was a good chance?

But to do that, I would have to go from square one back to square zero, which is to have it run at all on my machine. No Windows, remember, and the prod wouldn't run at all in WINE (complaints about msvcp140.dll). Some fiddling with installing the right runtime through winetricks made it run (but do remember to use vcrun2022 and not vcrun2019, even though the DLL names are the same…), but everything was black. More looking inside the .exe revealed that this is probably an SDL issue; the code creates an “SDL renderer” (SDL's 2D API for drawing things onto the window), which works great on native Linux and on native Windows, but somehow not in WINE. But the renderer is never ever used for anything, so I could just nop out the call, and voilà! Now I could see the dialog box. (Hey Navis, could you take out that call for next time? You don't need BASS_Start either as long as you haven't called BASS_Stop. :-) ) The precalc is super-duper-slow under WINE due to some CPU usage issue; it seems there may be a lot of multi-threaded memory allocation that's not very fast on the WINE side. But whatever, I could wait those 15 minutes or so, and after that, the demo would actually run perfectly!
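
Nopping out a call just means overwriting the instruction's bytes with one-byte x86 NOPs (0x90) so that execution falls straight through. A minimal sketch, assuming the file offset and original bytes of the call have already been found in a disassembler (the helper names `nop_out` and `patch_file` are hypothetical). On x86-64, a call through the import table is typically `call qword ptr [rip+disp32]`, encoded as `FF 15` plus a 4-byte displacement. This is only safe when nothing afterwards uses the call's return value, which holds here since the renderer is never used.

```python
# Sketch: NOP out one instruction in an executable by patching bytes.
# The offset and expected bytes are assumptions found via a disassembler.

NOP = 0x90  # one-byte x86 NOP


def nop_out(image: bytearray, offset: int, expected: bytes) -> None:
    """Overwrite len(expected) bytes at `offset` with NOPs.

    Refuses to patch unless the current bytes match `expected`, so a wrong
    offset or a different build of the binary fails loudly instead of
    silently corrupting the executable.
    """
    end = offset + len(expected)
    actual = bytes(image[offset:end])
    if actual != expected:
        raise ValueError(f"unexpected bytes at {offset:#x}: {actual.hex()}")
    image[offset:end] = bytes([NOP]) * len(expected)


def patch_file(path: str, offset: int, expected: bytes) -> None:
    """Read the whole file, patch it in memory, and write it back."""
    with open(path, "rb") as f:
        image = bytearray(f.read())
    nop_out(image, offset, expected)
    with open(path, "wb") as f:
        f.write(image)
```

Working on a copy of the .exe is wise; the comparison against `expected` is the only safety net.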

Next up would be converting my newfound demo-running powers into a render. I was a bit disappointed to learn that WINE doesn't contain any special function hook machinery (you would think these things are both easier and more useful in a layered implementation like that, right?), so I went for the classic hacky way of making a proxy DLL that would pretend to be some of the utility DLLs the program used, forwarding some calls and intercepting others. We need to intercept the SwapBuffers call, to save each frame to disk, and some timing functions, so that we can get perfect frame pacing with no drops, no matter how slow or fast our machine is. (You can call this a poor man's .kkapture, but my use of these techniques actually predates .kkapture by quite a bit. I was happy its author made something more polished back in the day, though, as I don't want to maintain hacky Windows-only software forever.)
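
Getting a program to load a proxy DLL instead of the real one can be as simple as overwriting the DLL name stored in its import table, provided the new name fits in place so no other data has to move. A minimal sketch, assuming a plain search for the NUL-terminated name is unambiguous (a more careful tool would parse the PE import directory instead); `patch_dll_name` is a hypothetical helper. If several libraries are redirected to the same proxy, that proxy must export every function the program imports from all of them.

```python
# Sketch: redirect an import to a proxy DLL by patching its name in place.


def patch_dll_name(image: bytearray, old: bytes, new: bytes) -> int:
    """Overwrite every NUL-terminated occurrence of `old` with `new`.

    Returns the number of occurrences patched. The names must be the same
    length so that nothing else in the file has to move.
    """
    if len(old) != len(new):
        raise ValueError("replacement must have the same length")
    needle = old + b"\0"  # import names are NUL-terminated ASCII strings
    count = 0
    pos = image.find(needle)
    while pos != -1:
        image[pos:pos + len(old)] = new
        count += 1
        pos = image.find(needle, pos + len(needle))
    return count
```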

Thankfully for us, the strings “SDL2.dll” and “BASS.dll” are exactly the same length, so I hexedited both to say “hook.dll” instead, which supplied the remaining functions. SwapBuffers was easy; just do glReadPixels() and write the frame to disk. (I assumed I would eventually need something faster, with asynchronous readback to a PBO, and probably also some PNG compression to save on I/O, but this was ludicrously fast as it was, so I never needed to change it.)

Timing was trickier; the demo seems to use both _Xtick_get_time() (an internal MSVC timing function; I'd assume what Navis wrote was std::chrono, and then it got inlined into that call) and BASS. Every frame, it seems to compare those two timers, and then adjust its frame pacing to be a little faster or slower depending on which one is ahead. (Since its only options are delta * 0.97 or delta * 1.03, I'd guess it can never run perfectly without jitter?) If it's more than 50 ms away from BASS, it even seeks the MP3 to match the real time! (I've heard complaints from some that the MP3 is skipping on their system, and I guess this is why.)

I don't know exactly why this is done, but I'd guess there are some physical effects that can only run “from the start” (i.e., there is feedback from the previous frame) and aren't happy about too-large timesteps, so getting a reliable time delta for each frame is important.
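
To make the pacing behavior described above concrete, here is a hypothetical reconstruction in Python. It is based only on the observed behavior (the 0.97/1.03 factors and the 50 ms resync threshold come from the text); the demo's real code is not shown here, and the name `pace` and its parameters are made up for illustration.

```python
# Hypothetical reconstruction of the observed pacing logic; the demo's
# actual code may differ in every detail except the constants.
SLOWER = 0.97
FASTER = 1.03
RESYNC_THRESHOLD = 0.050  # seconds


def pace(raw_delta: float, demo_time: float, audio_time: float):
    """Return (delta, seek_to) for one frame.

    raw_delta  -- time since the previous frame from the system timer (s)
    demo_time  -- the demo's own accumulated timeline (s)
    audio_time -- playback position reported by the audio library (s)

    seek_to is None unless the audio is more than RESYNC_THRESHOLD away,
    in which case the audio should be repositioned to demo_time.
    """
    drift = demo_time - audio_time
    seek_to = demo_time if abs(drift) > RESYNC_THRESHOLD else None
    # Only two choices: a bit slower when ahead, a bit faster otherwise.
    # With no "exactly 1.0" option, the timeline keeps oscillating around
    # the audio clock, which would show up as small-scale jitter.
    factor = SLOWER if drift > 0 else FASTER
    return raw_delta * factor, seek_to
```

Under this reading, a capture only gets smooth, drop-free timing if both clocks report the same frame-locked time, since any disagreement feeds straight into the per-frame delta.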

Anyhow, this means it's not enough to hook BASS' timer functions; we also need _Xtick_get_time() to give the same value (namely our current frame number divided by 59.94, suitably adjusted for units). This was a bit annoying, since this function lives in a third library, and I wasn't up for manually proxying all of the MSVC runtime. After some mulling, I found an unused SDL import (showing a message box), repurposed it to be _Xtick_get_time() and simply hexedited the 2–3 call sites to point to that import. Easy peasy, and the demo rendered perfectly to 300+ gigabytes of uncompressed 1440p frames without issue. (Well, I had an overflow issue at one point that caused the demo to go awry halfway, so I needed two renders instead of one. But this was refreshingly smooth.)
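
Two mechanical pieces of this step can be sketched in Python. First, the fake clock: every hooked timer derives its value from the frame counter, assuming "59.94" means the usual 60000/1001 rate; the tick rate is a parameter because the unit the hooked function must return is not given here. Second, redirecting a call site: this assumes a 64-bit binary, where an import call is `call qword ptr [rip+disp32]` (`FF 15` plus a signed 32-bit displacement measured from the end of the 6-byte instruction). All function names are hypothetical.

```python
import struct
from fractions import Fraction

# Assumed: "59.94" is the usual 60000/1001 frame rate.
FPS = Fraction(60000, 1001)


def frame_time_seconds(frame: int) -> Fraction:
    """Exact presentation time of `frame` in seconds."""
    return frame / FPS


def frame_time_ticks(frame: int, ticks_per_second: int) -> int:
    """Same time in integer ticks, rounded down.

    ticks_per_second is an assumption: use whatever unit the hooked
    timer function is expected to return.
    """
    return frame * ticks_per_second * 1001 // 60000


def redirect_call(image: bytearray, offset: int, call_rva: int,
                  new_slot_rva: int) -> None:
    """Point an x86-64 `call qword ptr [rip+disp32]` at another IAT slot.

    `offset` is the instruction's position in the file; call_rva and
    new_slot_rva are addresses relative to the image base. The displacement
    is relative to the next instruction, i.e. call_rva + 6.
    """
    if bytes(image[offset:offset + 2]) != b"\xff\x15":
        raise ValueError("not a RIP-relative indirect call")
    disp = new_slot_rva - (call_rva + 6)
    # struct.error is raised if the target is out of signed 32-bit range.
    image[offset + 2:offset + 6] = struct.pack("<i", disp)
```

Using exact fractions for the clock avoids accumulated rounding drift over tens of thousands of frames; only the final conversion to the hooked function's unit rounds.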

I did consider hacking the binary to get an actual 2160p capture; Navis has been pretty clear that it looks better the more resolution you have, but it felt like tampering with his art in a disallowed way. (The demo gives you the choice between 720p, 1080p, and 1440p. There's also a “safe for work” option, but I'm not entirely sure what it actually does!)
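
On the frame-dump side, a readback with glReadPixels() returns rows starting from the bottom-left corner, so a dumper typically flips the rows before writing, and the raw size per frame follows directly from the resolution. A minimal sketch, assuming 3 bytes per pixel (RGB) and tightly packed rows; the helper names are hypothetical.

```python
# Sketch of uncompressed frame handling after a glReadPixels() readback.

RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "2160p": (3840, 2160),
}


def frame_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Size of one uncompressed frame (3 bytes/pixel assumes RGB)."""
    return width * height * bytes_per_pixel


def flip_rows(pixels: bytes, width: int, height: int,
              bytes_per_pixel: int = 3) -> bytes:
    """Reverse the row order of a tightly packed image.

    Assumes no row padding. With GL_PACK_ALIGNMENT at its default of 4,
    that holds whenever width * bytes_per_pixel is a multiple of 4, which
    is true for every resolution listed above.
    """
    stride = width * bytes_per_pixel
    if len(pixels) != stride * height:
        raise ValueError("buffer size does not match dimensions")
    rows = [pixels[y * stride:(y + 1) * stride] for y in range(height)]
    return b"".join(reversed(rows))
```

At 1440p this comes to 11,059,200 bytes per frame, or roughly 40 GB per minute at 60000/1001 fps; a 2160p frame would be exactly 2.25 times larger.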

That left only the small matter of the actual encoding, which was the entire point of the exercise in the first place. I had already experimented a fair bit with this based on neon's captures, and had realized that the main challenge is to keep up the image quality while still having a file that people can actually play, which is much trickier than I had assumed. I originally wanted to use 10-bit AV1, but even with dav1d, the players I tried could not reliably keep up with the 1440p60 stream without dropping lots of frames. (It seemed to be somewhat single-thread bound, actually. mpv used 10% of that thread on updating its OSD, which seemed sort of wasteful given that I didn't have it enabled.) I tried various 8- and 10-bit encodes with both aomenc and SVT-AV1, and it just wasn't going well, so I had to abandon it. The point of this exercise, after all, is to be able to conveniently view the demo in high quality without having a monster machine. (The AV1 common knowledge seems to be that you should use Av1an as a wrapper to save you a lot of pain, but it doesn't have prebuilt Linux binaries or packages, depends on a zillion Rust crates, and instantly segfaulted on startup for me. I doubt it would affect the main issue much anyway.)

So I went a step down, to 10-bit HEVC, with the added bonus of somewhat wider hardware support. (I knew I wanted 10-bit, since 8-bit frequently has banding issues in gradient-heavy content such as this, and I probably needed every bit of fidelity I could get anyway, assuming decoding could keep up.)
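
A rough way to see why 8-bit struggles with gradients is to count how many distinct code values a smooth ramp gets after quantization; fewer values means wider, more visible bands. A minimal sketch under simplifying assumptions: full-range codes, plain rounding, no limited-range video levels, and no dithering. The function name and the example ramp are made up for illustration.

```python
# Rough banding model: distinct quantized levels across a horizontal ramp.


def distinct_levels(lo: float, hi: float, width: int, bits: int) -> int:
    """Count distinct code values in a lo..hi gradient `width` pixels wide.

    lo and hi are in [0, 1] and width must be at least 2. Values are
    quantized to full-range integer codes by rounding, ignoring
    limited-range video levels and any dithering.
    """
    max_code = (1 << bits) - 1
    codes = set()
    for x in range(width):
        v = lo + (hi - lo) * x / (width - 1)
        codes.add(int(v * max_code + 0.5))
    return len(codes)
```

For a dark ramp from 10% to 15% across 2560 pixels, this gives 13 levels at 8 bits (bands roughly 200 pixels wide) versus 52 at 10 bits, about four times finer.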

I used Level 5.2 Main10 as a rough guide; it's what Quick Sync supports, and I would assume hardware UHD players can also generally deal with it. Level 5.2 (without the High tier), very roughly, caps the bitrate at 60 Mbit/sec (I was generally using CRF encodes, but the max cap needed to come on top of that). Of course, if you just tell the encoder that 60 is the max, it will happily “save up” bytes during the initial black segment (or generally, during anything that is easy) and then burst up to 500 Mbit/sec for a brief second when the cool explosions happen, so that's obviously out of the question. This means there are also buffer limitations (again very roughly, the rules say you can only use 60 Mbit on average during any given one-second window). Of course, software video players don't generally follow these specs (if they've even heard of them), so I still had some frame drops.
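
The simplified "any one-second window" rule above can be checked against a finished encode's per-frame sizes (for instance, taken from a demuxer's packet list). A minimal sketch: it implements only that rough sliding-window approximation, not the real HEVC HRD leaky-bucket model, and the function and constant names are hypothetical.

```python
# Rough check of the "no more than X bits in any one-second window" rule.

LEVEL_5_2_MAIN_TIER_BPS = 60_000_000  # 60 Mbit/s, as in the text


def peak_window_bitrate(frame_bits: list[int], fps: float) -> float:
    """Highest average bitrate (bits/s) over any run of ~one second of frames."""
    if not frame_bits:
        return 0.0
    window = min(max(1, round(fps)), len(frame_bits))
    running = sum(frame_bits[:window])
    peak = running
    for i in range(window, len(frame_bits)):
        # Slide the window one frame: add the newest, drop the oldest.
        running += frame_bits[i] - frame_bits[i - window]
        peak = max(peak, running)
    # Convert bits-per-window into bits per second.
    return peak * fps / window
```

For example, 600 frames at 60 fps of 500,000 bits each, with a 10-frame burst of 5,000,000 bits, average 34.5 Mbit/s over the clip yet peak at 75 Mbit/s in one window: exactly the kind of burst the buffer limits are there to forbid.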

I generally responded by tightening the buffer spec a bit, turning off a couple of HEVC features (surprisingly, the higher presets can make harder-to-decode videos!), and then accepting that slower machines (that also do not have hardware acceleration) will drop a few frames in the most difficult scenes.

Over the course of a couple of days, I made dozens of test encodes using different settings, looking at them both in real time and sometimes using single-frame stepping. (Thank goodness for my 5950X!) More than once, I'd find that something that looked acceptable on my laptop was pretty bad on a 28" display, so some back and forth was needed. There are many scenes that have the worst possible combination of things for a video encode: sharp edges, lots of motion, smooth gradients, motion… and actually, a bunch of scenes look smudgy (and sometimes even blocky) in a video-compression-ish way even in the original, so having an uncompressed reference to compare to was useful.

Generally I try to stay away from applying too-specific settings; there's a lot of cargo culting in video encoding, and most of it is based more on hearsay than on solid testing. I will say that I chickened out and disabled SAO, though, based on “some guy said on a forum it's bad for high-sharpness high-bitrate content”, so I'm not completely guilt-free here. Also, for one scene I actually had to simply tell the encoder what to do; I added a “zone” just before it to allocate fewer bits to that section (where it wasn't as noticeable), and then set the quality just right for the problematic scene so that it would not run out of bits and go super-blocky mid-sequence. It's not perfect, but even the zones system in x265 does not allow going past the max rate (which would be outside the given level anyway, of course). I also added a little metadata to make sure hardware players know the right color primaries etc.; at least one encode on YouTube seems to have messed this up somehow, and is a bit too bright.
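
The kind of x265 invocation this paragraph describes can be assembled as a Python argument list. A minimal sketch, not the actual command used: it assumes a .y4m input (which carries its own resolution and frame rate) and that the frames were converted to BT.709 YUV, which is why BT.709 is tagged. The helper names, the CRF, the buffer size, and the zone frame numbers in the test are all hypothetical; the other HEVC features that were turned off are not named in the text, so only SAO is disabled here.

```python
# Sketch: building an x265 command line for a 10-bit Level 5.2 encode.

LEVEL_5_2_MAIN_TIER_MAX_KBPS = 60_000


def format_zones(zones: list[tuple[int, int, str]]) -> str:
    """Format (start_frame, end_frame, option) triples for x265 --zones.

    option is "b=<float>" (bitrate multiplier) or "q=<int>" (forced QP).
    """
    return "/".join(f"{start},{end},{opt}" for start, end, opt in zones)


def x265_args(src: str, dst: str, crf: float, maxrate_kbps: int,
              bufsize_kbits: int, zones: str = "") -> list[str]:
    """Build an x265 command line for a 10-bit Level 5.2 Main-tier encode."""
    if maxrate_kbps > LEVEL_5_2_MAIN_TIER_MAX_KBPS:
        raise ValueError("max rate exceeds Level 5.2 Main tier")
    args = [
        "x265", "--input", src, "--output", dst,
        "--profile", "main10", "--output-depth", "10",
        "--level-idc", "5.2", "--no-high-tier",
        "--crf", str(crf),
        "--vbv-maxrate", str(maxrate_kbps),   # kbit/s
        "--vbv-bufsize", str(bufsize_kbits),  # kbit
        "--no-sao",
        # Assumes the frames were converted to BT.709 YUV before encoding.
        "--colorprim", "bt709", "--transfer", "bt709",
        "--colormatrix", "bt709",
    ]
    if zones:
        args += ["--zones", zones]
    return args
```

The resulting list can be passed to `subprocess.run(..., check=True)`. A buffer size below the max rate is one way of "tightening the buffer spec", since it limits how much the encoder can save up for a burst.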

Audio was easy; I just dropped in the MP3 wholesale. I didn't see the point of encoding it down to something lower-bitrate, given that anything that can decode 10-bit HEVC can also easily decode MP3, and it's maybe 0.1% of my total file size. For an AV1 encode, I'd definitely transcode to Opus since that's the WebM ecosystem for you, but this is HEVC. In a Matroska mux, though, not MP4 (better metadata support, for one).
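
Stream-copying the video and the untouched MP3 into Matroska can be sketched as an ffmpeg command built in Python. This assumes ffmpeg is the muxer (other Matroska muxers would work as well); the file names are placeholders and `mux_args` is a hypothetical helper.

```python
# Sketch: mux raw HEVC + MP3 into Matroska without re-encoding.


def mux_args(video: str, audio: str, output: str,
             fps: str = "60000/1001") -> list[str]:
    """ffmpeg command that stream-copies raw HEVC + MP3 into Matroska."""
    if not output.endswith(".mkv"):
        raise ValueError("expected a Matroska (.mkv) output file")
    return [
        "ffmpeg",
        # A raw .hevc elementary stream has no container timestamps,
        # so give the raw demuxer the frame rate explicitly.
        "-framerate", fps, "-i", video,
        "-i", audio,
        "-map", "0:v:0", "-map", "1:a:0",
        "-c", "copy",  # no re-encoding: the MP3 goes in as-is
        output,
    ]
```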

All in all, I'm fairly satisfied with the result; it looks pretty good and plays OK with software decoding on my laptop most of the time (it plays great if I enable hardware acceleration), although I'm sure I've messed up something and it just barfs out on someone's machine. The irony is not lost on me that the file size ended up at 2.7 GB, after complaints that the demo itself is a bit over 600 MB compressed. (I do believe The Legend of Sisyphus actually defends its file size OK, although I would perhaps personally have preferred some sort of interpolation instead of storing all key frames separately at 30 Hz. I am less convinced about e.g. Pyrotech's 1+ GB production from the same party, though, or Fairlight's Mechasm. But as long as party rules allow arbitrary file sizes, we'll keep seeing these huge archives.) Even the 1440p YouTube video (in VP9) is about 1.1 GB, so perhaps I shouldn't have been surprised, but the bits really do pile up quickly for this kind of resolution and material.

If you want to look at the capture (and thus, the demo), you can find it here.

And now I think finally the boulder is resting at the top of the mountain for me, after having rolled down so many times. Until the next interesting demo comes along :-)

Tags: #Debian
