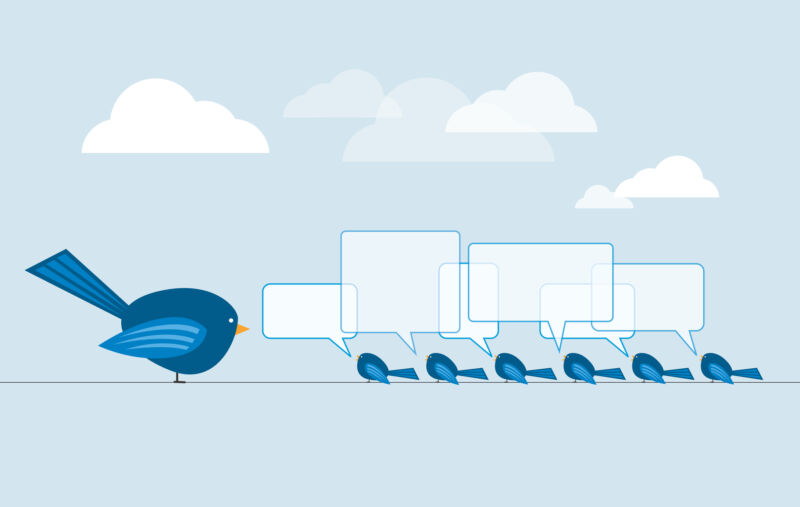

Twitter ditches free access to data, potentially hindering research

news.movim.eu / ArsTechnica · Friday, 10 February, 2023 - 16:49

(credit: Sean Gladwell)

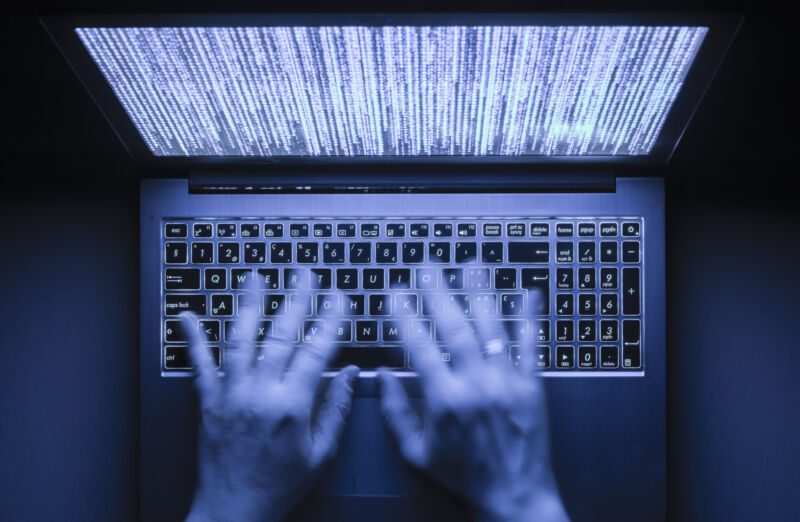

Twitter owner Elon Musk recently decided to end free access to Twitter's application programming interface (API), which gives users access to tweet data. The data provided by the social media platform has many uses. Third-party programs like Tweetbot, which helps users customize their feeds, have used Twitter's APIs, for example.

Experts in the field say the move could harm academic research by hindering access to data used in papers that analyze behavior on social media. When USC professor of computer science Kristina Lerman first heard about the move, she said her team started “scrambling to connect to collect the data we need for some of the projects we have going on this semester,” though the urgency subsided when more details were released, she told Ars.

Twitter will begin offering basic access to its API for $100 per month. Few details have been released so far, but Twitter’s website shows tiers of access with different tweet access limits, along with other limits on features like filtering. The higher tiers cost more.