
Intel’s $180 Arc A580 aims for budget gaming builds, but it’s a hard sell

news.movim.eu / ArsTechnica · Tuesday, 10 October, 2023 - 17:28 · 1 minute

Intel's Alchemist GPU silicon, the heart of the Arc A750, A770, and now, the A580. (credit: Intel)

Intel's Arc GPUs aren't bad for what they are, but a relatively late launch and driver problems meant that the company had to curtail its ambitions quite a bit. Early leaks and rumors that suggested a GeForce RTX 3080 Ti or RTX 3070 level of performance for the top-end Arc card never panned out, and the best Arc cards can usually only compete with $300-and-under midrange GPUs from AMD and Nvidia.

Today Intel is quietly releasing another GPU into that same midrange milieu, the Arc A580. Priced starting at $179, the card aims to compete with lower-end last-gen GPUs like the Nvidia GeForce RTX 3050 or AMD Radeon RX 6600, cards currently available for around $200 that aim to provide a solid 1080p gaming experience (though sometimes with a setting or two turned down for newer and more demanding games).

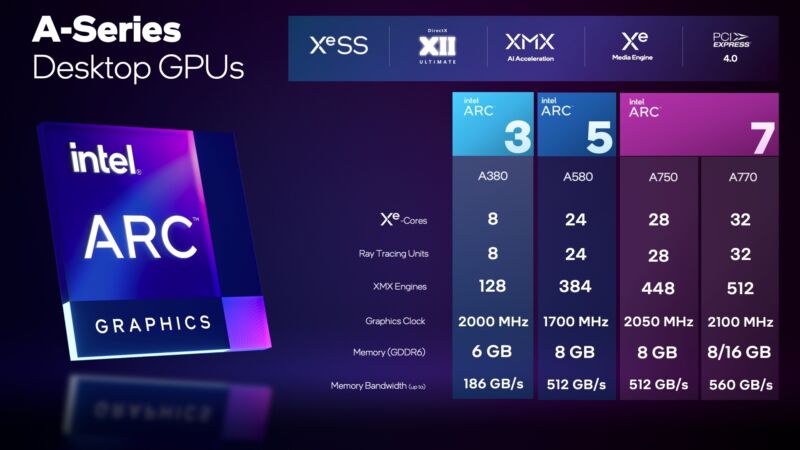

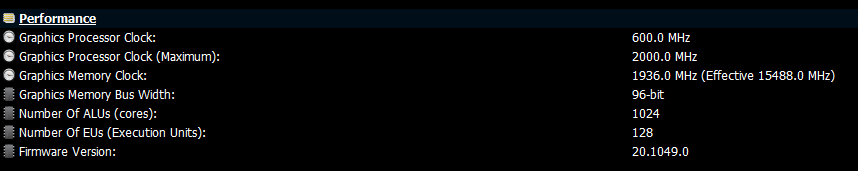

The A580 is based on the exact same Alchemist silicon as the Arc A750 and A770, but with just 24 of the Xe graphics cores enabled, instead of 28 for the A750 and 32 for the A770. Because it uses the same chip, it also keeps the same 256-bit memory bus as those higher-end cards, attached to a serviceable-for-the-price 8GB pool of GDDR6 RAM. Reviews from outlets like Tom's Hardware generally show the A580 beating the RTX 3050 and RX 6600 in most games, but falling a little short of the RTX 3060 and RX 7600 (to say nothing of the RTX 4060, which beats the Arc A750 and A770 in most games).