-

chevron_right

chevron_right

GPT2-chatbot – Une IA mystère qui serait la prochaine évolution d’OpenAI (GPT-4.5 / GPT-5) ?

news.movim.eu / Korben · Tuesday, 30 April - 07:51 · 2 minutes

Vous avez entendu parler de GPT2-chatbot ?

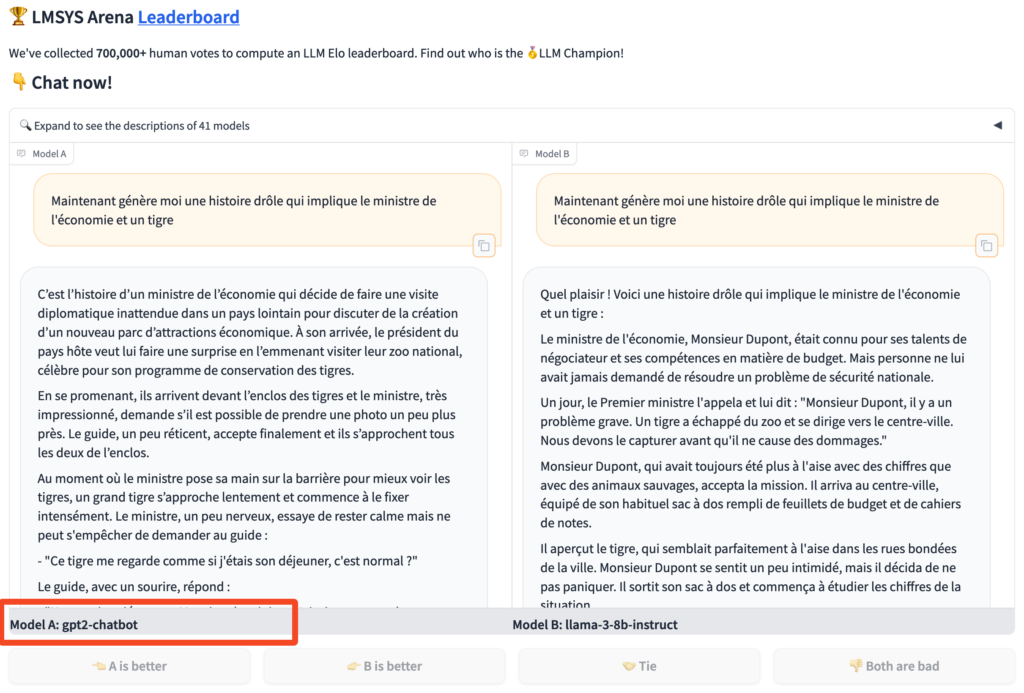

C’est un modèle de langage un peu mystérieux, accessible uniquement sur le site https://chat.lmsys.org , qui semble avoir des super pouvoirs dignes de ChatGPT . Mais attention, suspense… Personne ne sait d’où il sort !

Quand on lui pose la question, ce petit malin de GPT2-chatbot clame haut et fort qu’il est basé sur l’archi de GPT-4 sauf que voilà, ça colle pas vraiment avec son blaze GPT-2…

Du coup, les théories vont bon train. Certains pensent que c’est un coup fourré d’ OpenAI , qui l’aurait lâché en mode ninja sur le site de LMSYS pour tester un nouveau modèle en douce, possiblement GPT-4.5 ou GPT-5. D’autres imaginent que c’est LMSYS qui a bidouillé son propre chatbot et qui lui a bourré le crâne avec des données de GPT-4 pour le rendre plus savant que Wikipédia.

Moi, je pencherais plutôt pour la première hypothèse. Pourquoi ? Et bien ce GPT2-chatbot partage des caractéristiques bien spécifiques avec les modèles d’OpenAI, comme l’utilisation du tokenizer maison « tiktoken » ou encore une sensibilité toute particulière aux prompts malicieux .

Au travers de mes propres tests réalisés hier soir, j’ai pu constater que les différences entre GPT2-chatbot et GPT-4 étaient assez subtiles. Les textes générés par GPT2-chatbot sont effectivement mieux construits et de meilleure qualité. Lorsque j’ai demandé à Claude (un autre assistant IA) de comparer des textes produits par les deux modèles, c’est systématiquement celui de GPT2-chatbot qui ressortait gagnant.

Ma théorie personnelle est donc qu’il s’agit bien d’une nouvelle version améliorée de ChatGPT mais je ne pense pas qu’on soit déjà sur du GPT-5. Plutôt du GPT-4.5 grand maximum car les progrès, bien réels, ne sont pas non plus renversants. C’est plus une évolution subtile qu’une révolution.

Les internautes ont aussi leurs hypothèses . Certains imaginent que GPT2-chatbot pourrait en fait être un petit modèle comme GPT-2 (d’où son nom) mais boosté avec des techniques avancées comme Q* ou des agents multiples pour atteindre le niveau de GPT-4. D’autres pensent qu’OpenAI teste en secret une nouvelle architecture ou un nouvel algorithme d’entraînement révolutionnaire permettant d’obtenir les performances de GPT-4 avec un modèle compact. Les plus optimistes voient même en GPT2-chatbot les prémices de l’AGI !

Prêt à tester les talents cachés de GPT2-chatbot ?

Alors direction https://chat.lmsys.org , sélectionnez « gpt2-chatbot », cliquez sur « Chat » et c’est parti mon kiki !

Vous avez droit à 8 messages gratos en mode « tchatche directe » et après, faut passer en mode « Battle » pour continuer à jouer. Un petit conseil : pensez à repartir d’une page blanche en cliquant sur « New Round » à chaque fois que vous changez de sujet, sinon il risque de perdre le fil.

On verra bien dans quelques semaines quelle théorie sortira gagnante de ces discussions.