When it comes to advanced math, ChatGPT is no star student

news.movim.eu / ArsTechnica · Saturday, 20 May, 2023 - 16:00 · 1 minute

(credit: Peter Dazeley)

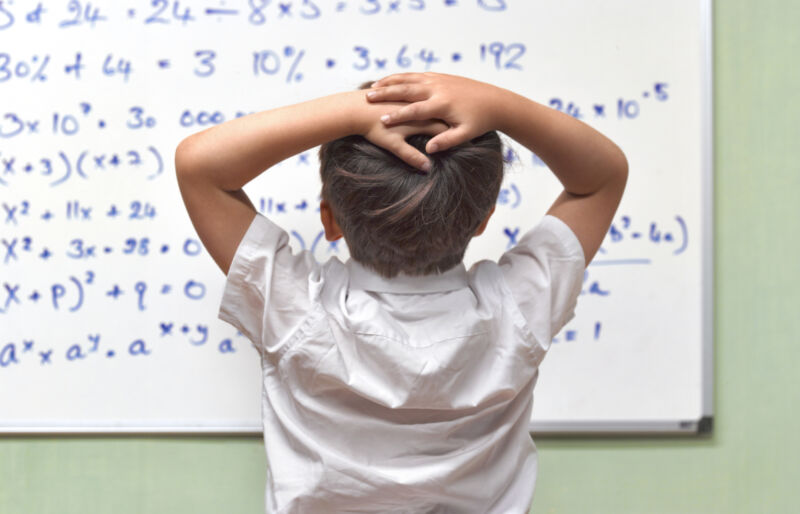

While learning high-level mathematics is no easy feat, teaching math concepts can often be just as tricky. That may be why many teachers are turning to ChatGPT for help. According to a recent Forbes article, 51 percent of teachers surveyed stated that they had used ChatGPT to help teach, with 10 percent using it daily. ChatGPT can help relay technical information in more basic terms, but it may not always provide the correct solution, especially for upper-level math.

An international team of researchers tested what the software could manage by providing the generative AI program with challenging graduate-level mathematics questions. While ChatGPT failed on a significant number of them, its correct answers suggested that it could be useful for math researchers and teachers as a type of specialized search engine.

Portraying ChatGPT’s math muscles

The media tends to portray ChatGPT’s mathematical intelligence as either brilliant or incompetent. “Only the extremes have been emphasized,” explained Frieder Simon, a University of Oxford PhD candidate and the study’s lead author. For example, ChatGPT aced Psychology Today’s Verbal-Linguistic Intelligence IQ Test, scoring 147 points, but failed miserably on Accounting Today’s CPA exam. “There’s a middle [road] for some use cases; ChatGPT is performing pretty well [for some students and educators], but for others, not so much,” Simon elaborated.